Augmenting SecDevOps Pipelines with AI Agents on Azure (part 2/2)

What we’re augmenting (in plain terms)

We’ve been hands-on with Azure DevOps and Azure cloud long enough to know this truth: our pipelines already produce the answers we need, but they hide them in logs, metrics, and alerts spread across tools. AI agents help us stitch that story together. In this post we share a simple, concrete pattern we use at JPSoftWorks to augment SecDevOps on Azure with agents that watch, correlate, and advise, all without getting in the team’s way.

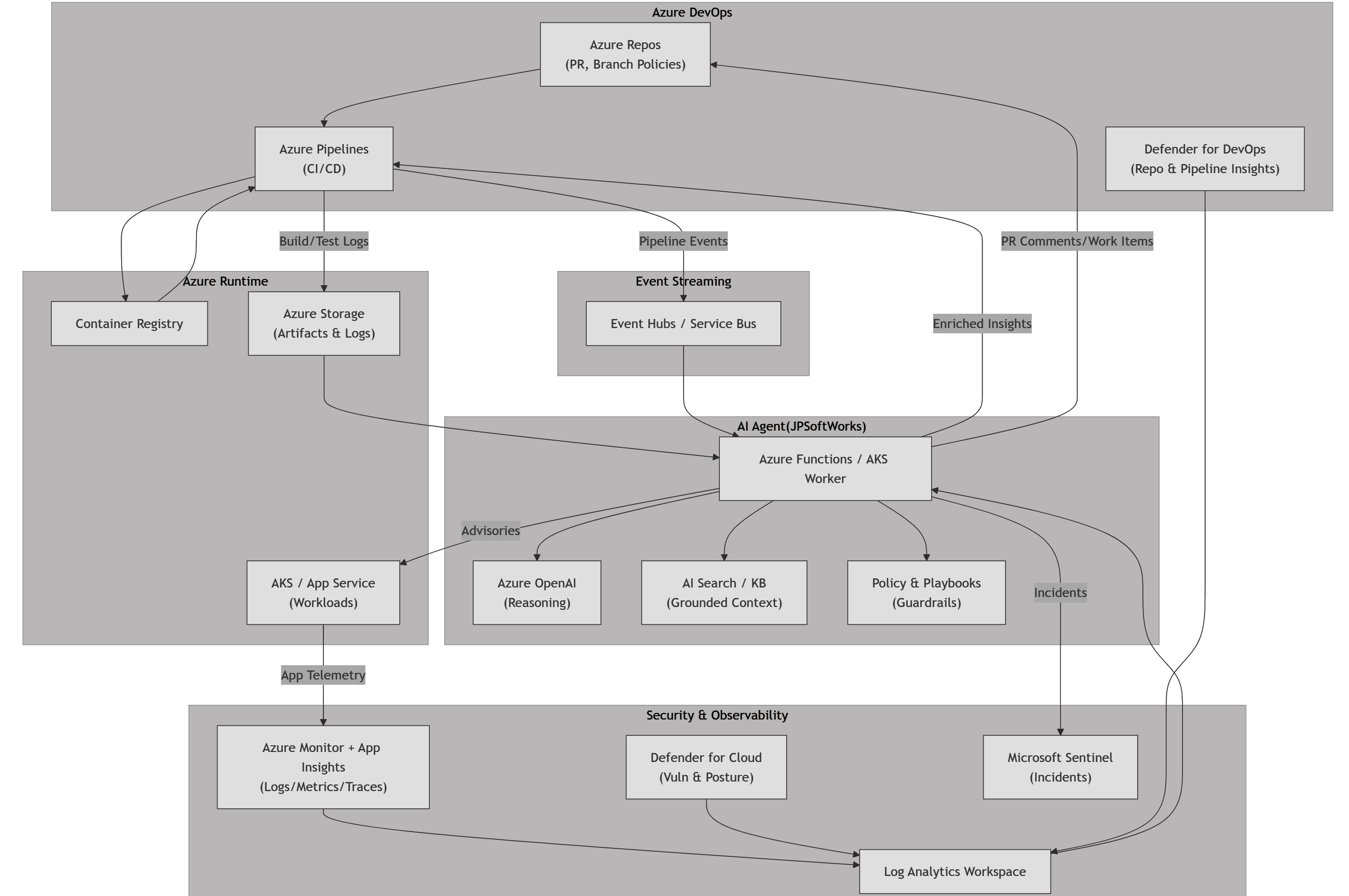

Where the agent plugs in

Our baseline looks familiar: Azure Repos or GitHub, Azure Pipelines for CI/CD, security gates for SCA/SAST/DAST, and deploys landing in Azure Kubernetes Service (AKS) or Azure App Service. Observability lives in Azure Monitor and Application Insights, with Defender for Cloud and Microsoft Defender for DevOps feeding risk signals. That stack is solid, but people still drown in information. The agent layer flips the script by doing three things for us:

- Enrich: Capture pipeline outputs, logs, and security findings; add context like recent commits, dependency diffs, infra drift, and change tickets.

- Correlate: Link symptoms to likely causes. For example, tie a spike in time-to-deploy to a newly added Helm hook, or associate a rise in false-positive vulns with a scanner rule update.

- Advise: Propose actions that fit our guardrails. That might be auto-opening a PR to tighten an Azure Policy, suggesting a change in test parallelism, or escalating a real incident to the right service team channel.
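
To make the correlate and advise steps concrete, here is a minimal Python sketch. It assumes enrichment has already produced a list of recent changes, and it uses the simplest possible heuristic: look for changes that landed shortly before the symptom. The `ChangeEvent` and `Symptom` shapes, the 24-hour window, and the function names are all hypothetical illustrations, not a real agent's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ChangeEvent:
    """A change gathered during enrichment (hypothetical shape)."""
    when: datetime
    kind: str       # e.g. "commit", "helm-hook", "scanner-rule-update"
    summary: str


@dataclass(frozen=True)
class Symptom:
    """An observed regression, such as a jump in time-to-deploy."""
    when: datetime
    metric: str
    summary: str


def correlate(symptom: Symptom, changes: list[ChangeEvent],
              window: timedelta = timedelta(hours=24)) -> list[ChangeEvent]:
    """Return changes that landed within `window` before the symptom, newest first."""
    candidates = [c for c in changes
                  if timedelta(0) <= symptom.when - c.when <= window]
    return sorted(candidates, key=lambda c: c.when, reverse=True)


def advise(symptom: Symptom, suspects: list[ChangeEvent]) -> str:
    """Turn the correlation into a short, human-readable suggestion."""
    if not suspects:
        return f"{symptom.summary}: no recent change found, needs manual triage."
    top = suspects[0]
    return f"{symptom.summary}: most recent related change is {top.kind} ({top.summary})."
```

Time proximity is only a starting signal. A real correlation step would likely also weigh which service, stage, or dependency each change touched.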

We place the AI agent on the event path that already exists in Azure:

- Ingest: Azure Pipelines publishes build/test artifacts and logs to Azure Blob Storage; telemetry flows into Log Analytics via Azure Monitor and Application Insights. Security signals arrive from Defender for Cloud and Defender for DevOps.

- Bus: We mirror key events to Event Hubs (or Service Bus) to keep the agent decoupled from the pipeline.

- Agent runtime: A lightweight service on Azure Functions or a container in AKS subscribes to those events.

- Reasoning + policies: The agent uses our in-house prompts, rules, and playbooks. When we need LLM capabilities, we call Azure OpenAI with strict grounding against our own knowledge base in Azure AI Search or a private storage account.

- Action: The agent writes comments back to Azure DevOps (PR threads, pipeline summaries), creates Git branches with fixes, files ADO work items, or posts annotated alerts to Teams. For runtime issues it can open a Sentinel incident with enriched evidence.
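
The path from bus to action can be sketched as a small dispatcher. This Python example assumes each bus message is a JSON document with `source`, `severity`, and `summary` fields. That schema, the routing table, and the three sink functions are hypothetical stand-ins; real sinks would call Azure DevOps, Teams, or Sentinel instead of returning strings.

```python
import json
from typing import Callable


# Hypothetical action sinks; each returns a string so the sketch stays runnable.
def comment_on_pr(event: dict) -> str:
    return f"PR comment: {event['summary']}"


def post_to_teams(event: dict) -> str:
    return f"Teams alert: {event['summary']}"


def open_sentinel_incident(event: dict) -> str:
    return f"Sentinel incident: {event['summary']}"


# Map (source, severity) to an action; the keys are illustrative only.
ROUTES: dict[tuple[str, str], Callable[[dict], str]] = {
    ("pipeline", "info"): comment_on_pr,
    ("pipeline", "warning"): post_to_teams,
    ("runtime", "critical"): open_sentinel_incident,
}


def handle_message(raw: bytes) -> str:
    """Decode one bus message and dispatch it; unknown events fall back to Teams."""
    event = json.loads(raw)
    key = (event.get("source"), event.get("severity"))
    action = ROUTES.get(key, post_to_teams)
    return action(event)
```

Keeping the routing table as plain data means it can be reviewed and versioned separately from the handler code.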

What it changes for observability

- From raw logs to narratives: Instead of five alerts in five tools, we get one narrative: “Deployment slowed by 34% since Aug 20 after adding OWASP ZAP to release stage; consider parallelizing scans or scoping targets.”

- Trend awareness: The agent tracks moving baselines. When build time creeps 5% weekly for three weeks, we hear about it before we feel it.

- Security prioritization: Findings get ranked by exploitability, exposure, and blast radius. A critical on a dead code path doesn’t jump the queue over a medium on an internet-facing service.

- Feedback loops: The agent tests its own advice. If shortening log retention hurts forensics, it rolls back the recommendation and explains why.
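
The trend-awareness example (build time creeping 5% weekly for three weeks) reduces to a small check over weekly averages. The sketch below assumes those averages are already computed, for instance from pipeline run durations. The function names and default thresholds are illustrative.

```python
def creeping_weeks(weekly_avgs: list[float], threshold: float = 0.05) -> int:
    """Count the most recent consecutive week-over-week increases of at least `threshold`."""
    streak = 0
    # Walk backwards through adjacent (previous, current) pairs, newest pair first.
    pairs = zip(reversed(weekly_avgs[:-1]), reversed(weekly_avgs[1:]))
    for prev, cur in pairs:
        if prev > 0 and (cur - prev) / prev >= threshold:
            streak += 1
        else:
            break
    return streak


def should_flag(weekly_avgs: list[float], threshold: float = 0.05, weeks: int = 3) -> bool:
    """Flag when the creep has persisted for at least `weeks` consecutive weeks."""
    return creeping_weeks(weekly_avgs, threshold) >= weeks
```

For example, weekly average build times of 600, 640, 690, and 740 seconds give three consecutive increases above 5%, so `should_flag` returns `True`. A single flat week in between resets the streak.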

A simple Azure blueprint

Here’s a minimal diagram we use to explain the pattern to teams.

How we run it safely

- Data boundaries first: The agent only sees what it must. We scope Log Analytics queries per service, and we route secrets through Key Vault. LLM calls are private, with prompt/response logging scrubbed.

- Explain, then act: Advice is default; actions require either a runbook with pre-approved change types or explicit human approval in the PR or pipeline check.

- Versioned knowledge: The playbooks that guide the agent are versioned like code and validated in non-prod pipelines.

- Cost controls: We batch events, use Retrieval-Augmented Generation only when needed, and cache prior reasoning for repeated issues.
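
The "explain, then act" guardrail above can be expressed as a small decision function. This is a sketch under assumptions: the `PRE_APPROVED` allow-list, the `Proposal` shape, and the three outcomes are hypothetical. In practice the approval signal would come from a PR review or a pipeline check rather than a boolean field.

```python
from dataclasses import dataclass

# Hypothetical allow-list of change types covered by a pre-approved runbook.
PRE_APPROVED = {"restart-pod", "rerun-pipeline", "reopen-work-item"}


@dataclass(frozen=True)
class Proposal:
    change_type: str
    explanation: str
    human_approved: bool = False


def decide(p: Proposal) -> str:
    """Return 'act' only when a guardrail allows it; otherwise advise or reject."""
    if not p.explanation.strip():
        return "reject"  # explain first: no explanation, no action
    if p.change_type in PRE_APPROVED or p.human_approved:
        return "act"
    return "advise"      # default: surface the advice and wait for a human
```

With this shape, a proposal to tighten a policy stays at `"advise"` until someone approves it, while a pre-approved pipeline rerun can proceed on its own.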

Day-one use cases

- Pipeline drift detector: Alert when stage order, conditions, or time budgets change materially; open a PR to restore standards.

- Noise reducer for security scans: Group duplicates, suppress known-benign families, and re-rank by exposure paths discovered from Azure Resource Graph.

- SLO watchdog: Watch error budgets from App Insights; if burn rate exceeds policy, the agent pauses risky deploys and proposes a rollback with links to failing traces.

- Dependency risk coach: When a new package lands, the agent checks support windows and transitive vulns, then proposes a safer alternative or pin strategy.
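
For the SLO watchdog, the core arithmetic is the error-budget burn rate: the observed error ratio divided by the ratio the SLO allows. The sketch below assumes request and failure counts have already been pulled from telemetry for a given window. The 99.9% SLO and the maximum burn rate of 2.0 are placeholder policy values, not recommendations.

```python
def burn_rate(errors: int, total: int, slo: float) -> float:
    """Observed error ratio divided by the error ratio the SLO allows."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be strictly between 0 and 1")
    if total == 0:
        return 0.0  # no traffic in the window, so no budget was burned
    allowed = 1.0 - slo
    return (errors / total) / allowed


def deploy_decision(errors: int, total: int,
                    slo: float = 0.999, max_burn: float = 2.0) -> str:
    """Return 'pause' when the budget burns faster than policy allows, else 'proceed'."""
    return "pause" if burn_rate(errors, total, slo) > max_burn else "proceed"
```

As an example, 5 failures in 1,000 requests against a 99.9% SLO gives a burn rate of about 5, well above the placeholder limit, so the decision is `"pause"`.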

Bottom line: we keep our existing Azure stack. We just add an agent that listens harder, connects dots faster, and nudges us toward better decisions.